How to build a custom ChatGPT assistant for your documentation

Using vector embeddings for context

ChatGPT can be a helpful tool for development and learning. But only if it knows about the technology you're using.

I wanted ChatGPT to help me develop Hilla applications. But because Hilla was released after the 2021 cutoff of ChatGPT's training data, ChatGPT made up answers that had nothing to do with reality instead of giving helpful ones.

I also had the challenge that Hilla supports both React and Lit on the front end, and I wanted to ensure that the answers use the correct framework as context.

Here's how I built an assistant using the most up-to-date documentation as context for ChatGPT to give me relevant answers.

Key concept: embeddings

ChatGPT, like other LLMs, has a limited context size that needs to fit your question, relevant background information, and the answer. For example, gpt-3.5-turbo has a limit of 4096 tokens or roughly 3000 words. In order to get relevant answers to your questions, you need to include the most valuable parts of documentation in the prompt.

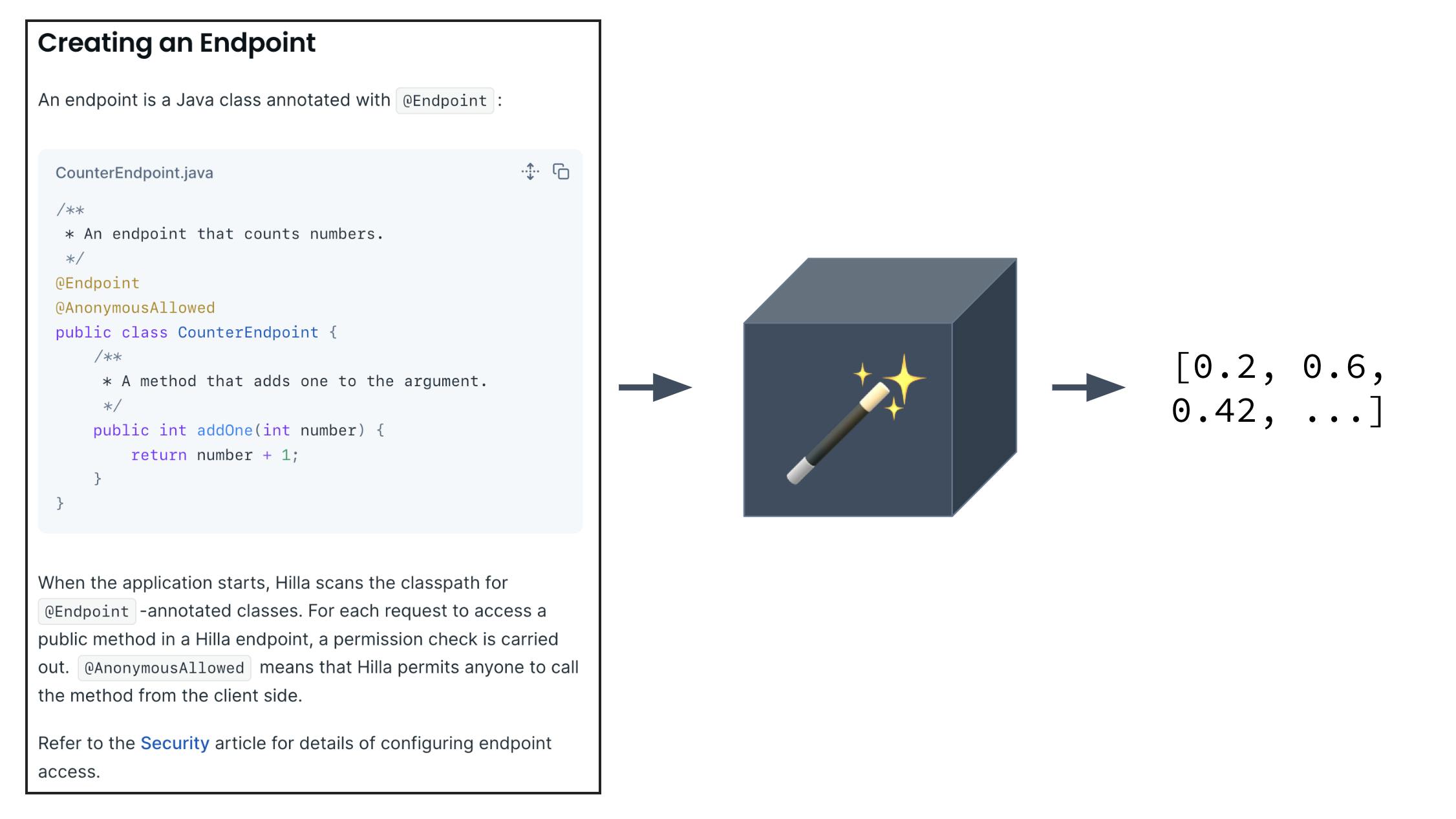

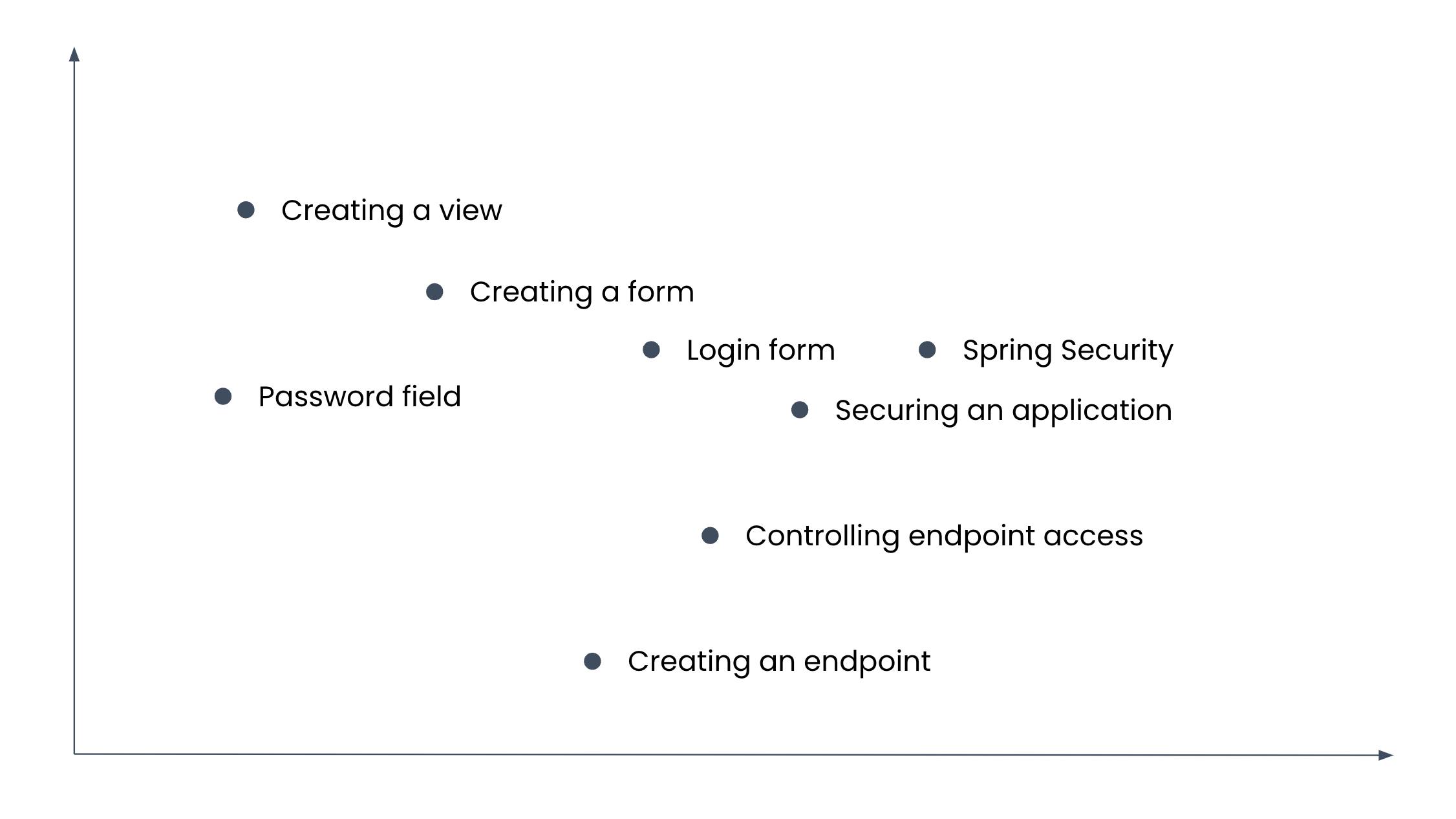

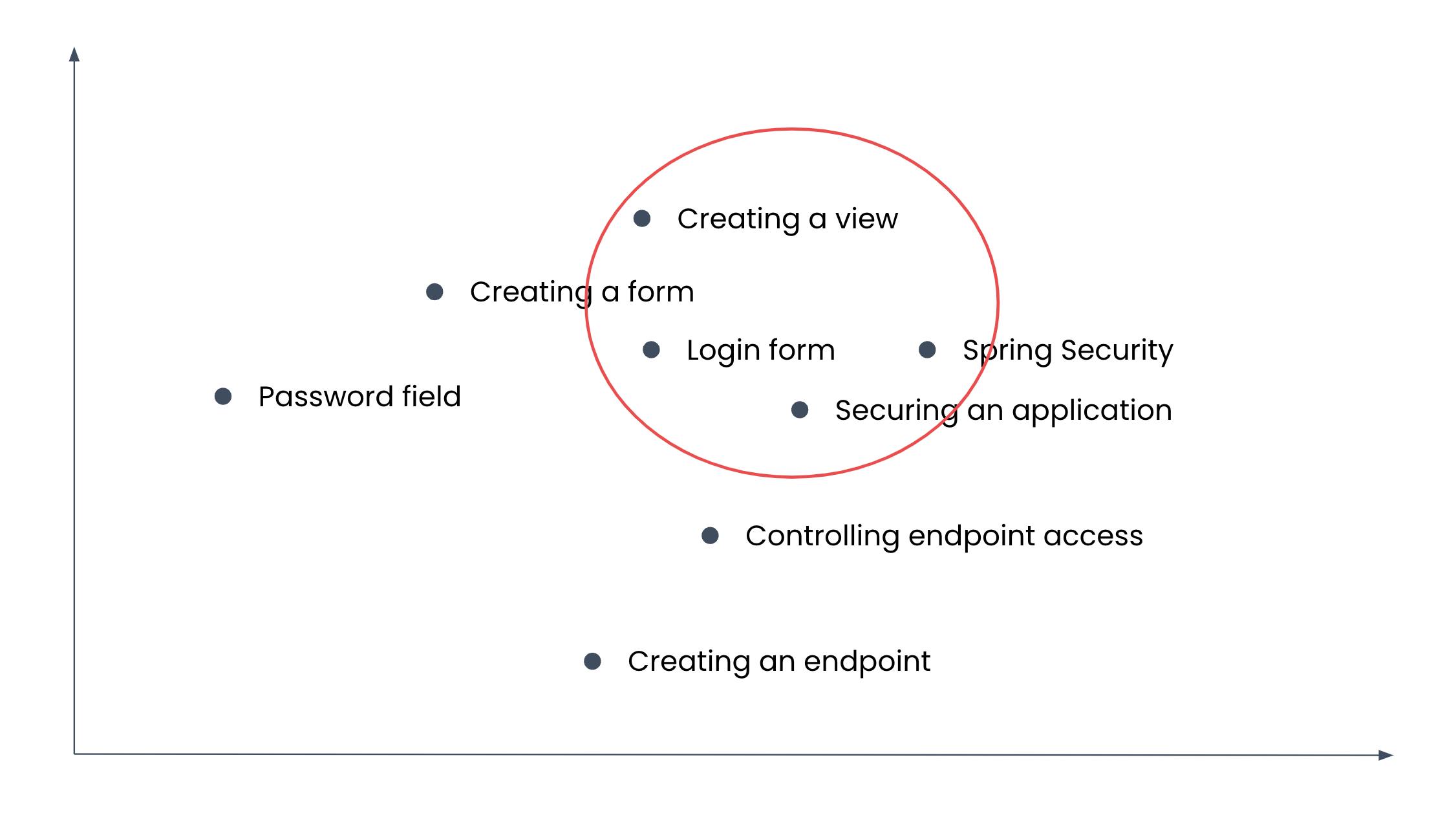

An efficient way of finding the most relevant parts of the documentation to include is embeddings. You can think of embeddings as a way of encoding the meaning of a piece of text into a vector that describes a point in a multi-dimensional space. Texts with similar meanings are close to each other, while texts that differ in meaning are farther apart.

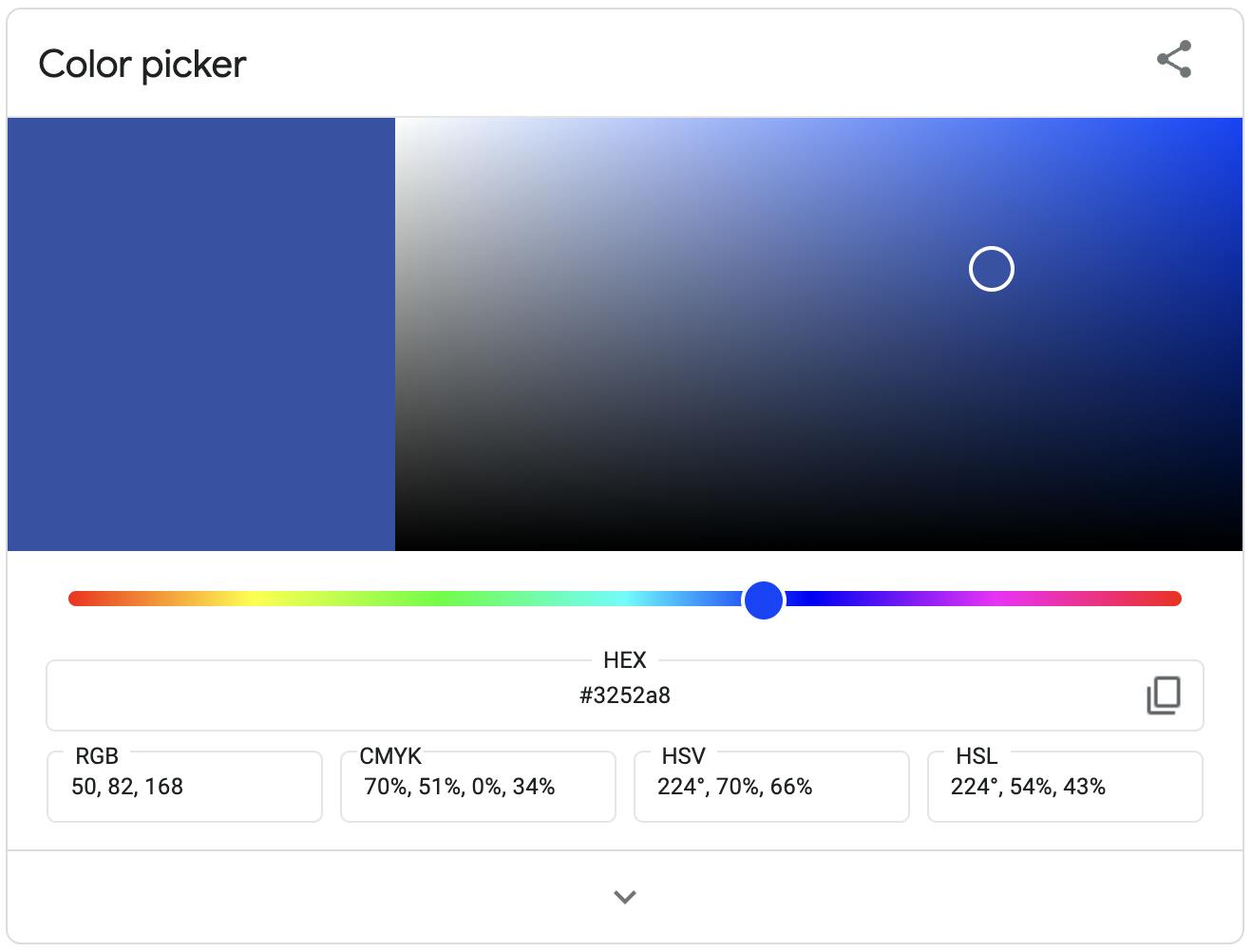

The concept is very similar to a color picker. Each color can be represented by a 3-element vector of red, green, and blue values. Similar colors have similar values, whereas different colors have different values.
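To make the color analogy concrete, here is a minimal sketch that treats RGB colors as 3-element vectors and measures how far apart they are. The `distance` helper and the sample colors are illustrative assumptions, not part of the assistant's code. Real text embeddings work the same way in principle, only with many more dimensions.

```typescript
// Illustrative only: RGB colors as 3-element vectors.
type Vector = number[];

// Euclidean distance between two vectors: smaller means "more similar".
function distance(a: Vector, b: Vector): number {
  if (a.length !== b.length) {
    throw new Error('Vectors must have the same length');
  }
  return Math.sqrt(a.reduce((sum, value, i) => sum + (value - b[i]) ** 2, 0));
}

const red: Vector = [255, 0, 0];
const darkRed: Vector = [200, 0, 0];
const blue: Vector = [0, 0, 255];

console.log(distance(red, darkRed)); // 55
console.log(distance(red, blue) > distance(red, darkRed)); // true
```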

For this article, it's enough to know that there's an OpenAI API that turns texts into embeddings. This article on embeddings is a good resource if you want to learn more about how embeddings work under the hood.

Once you have created embeddings for your documentation, you can quickly find the most relevant parts to include in the prompt by finding the parts closest in meaning to the question.
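The lookup described above can be sketched in memory with cosine similarity, a common way to compare embeddings. This is a simplified illustration with toy 2-dimensional vectors. The `DocChunk` type and the `findClosest` helper are hypothetical names. In the actual app, a vector database performs this search.

```typescript
// Hypothetical shape for a piece of documentation and its embedding.
interface DocChunk {
  text: string;
  embedding: number[];
}

// Cosine similarity: close to 1 for vectors pointing in the same direction.
function cosineSimilarity(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return the n chunks whose embeddings are most similar to the question.
function findClosest(question: number[], chunks: DocChunk[], n: number): DocChunk[] {
  return chunks
    .map(chunk => ({ chunk, score: cosineSimilarity(question, chunk.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, n)
    .map(({ chunk }) => chunk);
}

// Toy data: real embeddings have far more dimensions.
const chunks: DocChunk[] = [
  { text: 'Routing in React', embedding: [0.9, 0.1] },
  { text: 'Form binding', embedding: [0.1, 0.9] },
  { text: 'React views', embedding: [0.8, 0.3] },
];

const closestTexts = findClosest([1, 0], chunks, 2).map(c => c.text);
console.log(closestTexts); // [ 'Routing in React', 'React views' ]
```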

The big picture: providing documentation as context to ChatGPT

Here are the high-level steps needed to make ChatGPT use your documentation as context for answering questions:

Generating embeddings for your documentation

Split your documentation into smaller parts, for instance, by heading, and create an embedding (vector) for each chunk.

Save the embedding, source text, and other metadata in a vector database.

Answering questions with documentation as context

Create an embedding for the user question.

Search the vector database for the N parts of the documentation most related to the question using the embedding.

Construct a prompt instructing ChatGPT to answer the given question only using the provided documentation.

Use the OpenAI API to generate a completion for the prompt.

In the following sections, I will go into more detail about how I implemented these steps.

Tools used

Source code

I will only highlight the most relevant sections of the code below. You can find the complete source code on GitHub.

Processing documentation

The Hilla documentation is in AsciiDoc format. The steps needed to process it into embeddings are as follows:

Process the AsciiDoc files with Asciidoctor to resolve code snippets and other includes

Split the resulting document into sections based on the HTML document structure

Convert the content to plain text to save on tokens

If needed, split sections into smaller pieces

Create embedding vectors for each text section

Save the embedding vectors along with the source text into Pinecone
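The "split sections into smaller pieces" step can be sketched as follows. This is a simplified stand-in and not the splitter used in the app: the `splitSection` helper and its `maxChars` limit are assumptions made for illustration. It keeps paragraphs together where possible and only hard-splits a paragraph that is too long on its own.

```typescript
// Hypothetical helper: split a section into pieces of at most maxChars,
// breaking on paragraph boundaries (blank lines) where possible.
function splitSection(text: string, maxChars: number): string[] {
  const pieces: string[] = [];
  let current = '';
  for (const paragraph of text.split(/\n{2,}/)) {
    const candidate = current ? `${current}\n\n${paragraph}` : paragraph;
    if (candidate.length <= maxChars) {
      current = candidate;
      continue;
    }
    if (current) pieces.push(current);
    // A single paragraph longer than maxChars is cut at fixed offsets.
    let rest = paragraph;
    while (rest.length > maxChars) {
      pieces.push(rest.slice(0, maxChars));
      rest = rest.slice(maxChars);
    }
    current = rest;
  }
  if (current) pieces.push(current);
  return pieces;
}

console.log(splitSection('aaaa\n\nbbbb\n\ncccc', 10)); // [ 'aaaa\n\nbbbb', 'cccc' ]
console.log(splitSection('abcdefghij', 4)); // [ 'abcd', 'efgh', 'ij' ]
```

A character limit is only a rough proxy for a token limit, so a real splitter would likely count tokens instead.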

Asciidoc processing

async function processAdoc(file, path) {
  console.log(`Processing ${path}`);

  const frontMatterRegex = /^---[\s\S]+?---\n*/;

  const namespace = path.includes('articles/react') ? 'react' : path.includes('articles/lit') ? 'lit' : '';
  if (!namespace) return;

  // Remove front matter. The JS version of asciidoctor doesn't support removing it.
  const noFrontMatter = file.replace(frontMatterRegex, '');

  // Run through asciidoctor to get includes
  const html = asciidoctor.convert(noFrontMatter, {
    attributes: {
      root: process.env.DOCS_ROOT,
      articles: process.env.DOCS_ARTICLES,
      react: namespace === 'react',
      lit: namespace === 'lit'
    },
    safe: 'unsafe',
    base_dir: process.env.DOCS_ARTICLES
  });

  // Extract sections
  const dom = new JSDOM(html);
  const sections = dom.window.document.querySelectorAll('.sect1');

  // Convert section html to plain text to save on tokens
  const plainText = Array.from(sections).map(section => convert(section.innerHTML));

  // Split section content further if needed, filter out short blocks
  const docs = await splitter.createDocuments(plainText);
  const blocks = docs.map(doc => doc.pageContent)
    .filter(block => block.length > 200);

  await createAndSaveEmbeddings(blocks, path, namespace);
}
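To see what the first two steps of `processAdoc` do in isolation, the sketch below applies the same front matter regular expression and the same path check to sample input. The `detectNamespace` helper name, the sample file content, and the sample path are made up for illustration.

```typescript
// Same pattern as in processAdoc: a leading "---" block plus trailing newlines.
const frontMatterRegex = /^---[\s\S]+?---\n*/;

// Hypothetical helper mirroring the nested ternary in processAdoc.
function detectNamespace(path: string): 'react' | 'lit' | '' {
  if (path.includes('articles/react')) return 'react';
  if (path.includes('articles/lit')) return 'lit';
  return ''; // files outside both trees are skipped
}

// Made-up sample document with front matter.
const sample = '---\ntitle: Routing\norder: 10\n---\n\n= Routing\n\nSome text.';

console.log(JSON.stringify(sample.replace(frontMatterRegex, ''))); // "= Routing\n\nSome text."
console.log(detectNamespace('docs/articles/lit/guides/routing.adoc')); // lit
console.log(detectNamespace('docs/README.adoc') === ''); // true
```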

Create embeddings and save them

async function createAndSaveEmbeddings(blocks, path, namespace) {
  // OpenAI suggests removing newlines for better performance when creating embeddings.
  // Don't remove them from the source.
  const withoutNewlines = blocks.map(block => block.replace(/\n/g, ' '));
  const embeddings = await getEmbeddings(withoutNewlines);

  const vectors = embeddings.map((embedding, i) => ({
    id: nanoid(),
    values: embedding,
    metadata: {
      path: path,
      text: blocks[i]
    }
  }));

  await pinecone.upsert({
    upsertRequest: {
      vectors,
      namespace
    }
  });
}
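The function above relies on the embeddings coming back in the same order as the input blocks, because `blocks[i]` is paired with the embedding at index `i`. The sketch below isolates that pairing as a pure function and adds a length check. `buildVectors`, `VectorRecord`, and the injected `makeId` callback are hypothetical names used for illustration, and the sample path is made up.

```typescript
// Hypothetical record shape, matching the fields used in the upsert above.
interface VectorRecord {
  id: string;
  values: number[];
  metadata: { path: string; text: string };
}

// Pair each embedding with the original (newline-preserving) source text.
function buildVectors(
  blocks: string[],
  embeddings: number[][],
  path: string,
  makeId: () => string
): VectorRecord[] {
  if (blocks.length !== embeddings.length) {
    throw new Error(`Expected ${blocks.length} embeddings, got ${embeddings.length}`);
  }
  return embeddings.map((values, i) => ({
    id: makeId(),
    values,
    metadata: { path, text: blocks[i] },
  }));
}

// Usage with a simple counter instead of random ids.
let counter = 0;
const records = buildVectors(
  ['First block', 'Second block'],
  [[0.1, 0.2], [0.3, 0.4]],
  'articles/react/start.adoc',
  () => `id-${++counter}`
);
console.log(records[1].id, records[1].metadata.text); // id-2 Second block
```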

Get embeddings from OpenAI

export async function getEmbeddings(texts) {
  const response = await openai.createEmbedding({
    model: 'text-embedding-ada-002',
    input: texts
  });
  return response.data.data.map((item) => item.embedding);
}

Searching with context

So far, we have split the documentation into small sections and saved them in a vector database. When a user asks a question, we need to:

Create an embedding based on the asked question

Search the vector database for the 10 parts of the documentation that are most relevant to the question

Create a prompt that fits as many documentation sections as possible into 1536 tokens, leaving 2560 of the 4096 available tokens for the answer.
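The token budgeting in the last step can be sketched like this. It is a hypothetical stand-in for the app's `getContextString`, whose implementation is not shown in this article. The `estimateTokens` heuristic of roughly four characters per token is an assumption, and a real implementation would use a proper tokenizer.

```typescript
// Budget from the steps above, for a 4096-token context window.
const CONTEXT_WINDOW = 4096;
const MAX_DOC_TOKENS = 1536;
console.log(CONTEXT_WINDOW - MAX_DOC_TOKENS); // 2560

// Assumed rough heuristic: about 4 characters per token for English text.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Hypothetical stand-in for getContextString: add sections, most relevant
// first, until the next one would exceed the token budget.
function packContext(sections: string[], maxTokens: number): string {
  const included: string[] = [];
  let used = 0;
  for (const section of sections) {
    const cost = estimateTokens(section);
    if (used + cost > maxTokens) break;
    included.push(section);
    used += cost;
  }
  return included.join('\n---\n');
}

// Costs are 1, 2, and 1 tokens; only the first two fit into a budget of 3.
const packed = packContext(['aaaa', 'bbbbbbbb', 'cccc'], 3);
console.log(JSON.stringify(packed)); // "aaaa\n---\nbbbbbbbb"
```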

async function getMessagesWithContext(messages: ChatCompletionRequestMessage[], frontend: string) {
  // Ensure that there are only messages from the user and assistant, trim input
  const historyMessages = sanitizeMessages(messages);

  // Send all messages to OpenAI for moderation.
  // Throws exception if flagged -> should be handled properly in a real app.
  await moderate(historyMessages);

  // Extract the last user message to get the question
  const [userMessage] = historyMessages.filter(({role}) => role === ChatCompletionRequestMessageRoleEnum.User).slice(-1);

  // Create an embedding for the user's question
  const embedding = await createEmbedding(userMessage.content);

  // Find the most similar documents to the user's question
  const docSections = await findSimilarDocuments(embedding, 10, frontend);

  // Get at most 1536 tokens of documentation as context
  const contextString = await getContextString(docSections, 1536);

  // The messages that set up the context for the question
  const initMessages: ChatCompletionRequestMessage[] = [
    {
      role: ChatCompletionRequestMessageRoleEnum.System,
      content: codeBlock`
        ${oneLine`
          You are Hilla AI. You love to help developers!
          Answer the user's question given the following
          information from the Hilla documentation.
        `}
      `
    },
    {
      role: ChatCompletionRequestMessageRoleEnum.User,
      content: codeBlock`
        Here is the Hilla documentation:
        """
        ${contextString}
        """
      `
    },
    {
      role: ChatCompletionRequestMessageRoleEnum.User,
      content: codeBlock`
        ${oneLine`
          Answer all future questions using only the above
          documentation and your knowledge of the
          ${frontend === 'react' ? 'React' : 'Lit'} library
        `}
        ${oneLine`
          You must also follow the below rules when answering:
        `}
        ${oneLine`
          - Do not make up answers that are not provided
          in the documentation
        `}
        ${oneLine`
          - If you are unsure and the answer is not explicitly
          written in the documentation context, say
          "Sorry, I don't know how to help with that"
        `}
        ${oneLine`
          - Prefer splitting your response into
          multiple paragraphs
        `}
        ${oneLine`
          - Output as markdown
        `}
        ${oneLine`
          - Always include code snippets if available
        `}
      `
    }
  ];

  // Cap the messages to fit the max token count, removing earlier messages if necessary
  return capMessages(
    initMessages,
    historyMessages
  );
}
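The final `capMessages` call is not shown in this article. As a rough idea of what such a function might do, the sketch below drops the oldest history messages until the total fits a token budget, while always keeping the set-up messages and the latest question. The `capMessagesSketch` name, the explicit `maxTokens` parameter, and the four-characters-per-token estimate are assumptions for illustration.

```typescript
// Simplified message shape for this sketch.
interface ChatMessage {
  role: 'system' | 'user' | 'assistant';
  content: string;
}

// Assumed rough heuristic: about 4 characters per token.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

function totalTokens(messages: ChatMessage[]): number {
  return messages.reduce((sum, m) => sum + estimateTokens(m.content), 0);
}

// Hypothetical stand-in for capMessages: keep all set-up messages, then drop
// the oldest history messages until everything fits into maxTokens.
function capMessagesSketch(
  initMessages: ChatMessage[],
  chatHistory: ChatMessage[],
  maxTokens: number
): ChatMessage[] {
  const kept = chatHistory.slice();
  // Always keep at least the most recent message, which holds the question.
  while (kept.length > 1 && totalTokens(initMessages) + totalTokens(kept) > maxTokens) {
    kept.shift();
  }
  return initMessages.concat(kept);
}

const capped = capMessagesSketch(
  [{ role: 'system', content: '12345678' }], // 2 tokens
  [
    { role: 'user', content: 'aaaaaaaa' }, // 2 tokens, oldest
    { role: 'assistant', content: 'bbbbbbbb' }, // 2 tokens
    { role: 'user', content: 'cccc' }, // 1 token, latest question
  ],
  5
);
console.log(capped.map(m => m.content)); // [ '12345678', 'bbbbbbbb', 'cccc' ]
```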

When a user asks a question, we call getMessagesWithContext() to get the messages we need to send to ChatGPT. We then call the OpenAI API to get the completion and stream the response to the client.

export default async function handler(req: NextRequest) {
  // All the non-system messages up until now along with
  // the framework we should use for the context.
  const {messages, frontend} = (await req.json()) as {
    messages: ChatCompletionRequestMessage[],
    frontend: string
  };

  const completionMessages = await getMessagesWithContext(messages, frontend);
  const stream = await streamChatCompletion(completionMessages, MAX_RESPONSE_TOKENS);

  return new Response(stream);
}
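The handler above trusts the shape of the request body through a type cast. In a real app you would probably want to validate it first, since the `frontend` value selects which part of the documentation is searched. The following is a minimal sketch of such a check. `parseChatRequest` and its types are hypothetical and not part of the demo app.

```typescript
type Frontend = 'react' | 'lit';

// Hypothetical validated request shape.
interface ChatRequestBody {
  messages: { role: string; content: string }[];
  frontend: Frontend;
}

// Validate an unknown JSON body instead of casting it.
function parseChatRequest(body: unknown): ChatRequestBody {
  if (typeof body !== 'object' || body === null) {
    throw new Error('Body must be a JSON object');
  }
  const { messages, frontend } = body as { messages?: unknown; frontend?: unknown };
  if (frontend !== 'react' && frontend !== 'lit') {
    throw new Error('frontend must be "react" or "lit"');
  }
  if (!Array.isArray(messages)) {
    throw new Error('messages must be an array');
  }
  const allValid = messages.every(
    m => typeof m === 'object' && m !== null &&
      typeof m.role === 'string' && typeof m.content === 'string'
  );
  if (!allValid) {
    throw new Error('every message needs a string role and content');
  }
  return { messages, frontend };
}

const parsed = parseChatRequest({
  frontend: 'lit',
  messages: [{ role: 'user', content: 'How do I create a view?' }],
});
console.log(parsed.frontend, parsed.messages.length); // lit 1
```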

Acknowledgments

This post and demo app wouldn't have been possible without the excellent insights and resources below:

Hassan El Mghari's post on Building a GPT-3 app with Next.js and Vercel Edge Functions

Greg Richardson's Storing OpenAI embeddings in Postgres with pgvector and the implementation in the Supabase GitHub repo.